Watch What I Do

In his classic 1983 article, Ben Shneiderman introduced the concept of a "direct manipulation" interface, in which objects on the screen can be pointed to and manipulated using a mouse and keyboard [Shneiderman 83]. This concept was further studied in other articles [Hutchins 86]. With the advent of the Apple Macintosh in 1984, this style of interface became more popular, and now is widely accepted. However, there are some well-recognized limitations of conventional direct manipulation interfaces. For example, it is common in Unix for users to write parameterized macros ("shell scripts") that perform common and repetitive tasks, but the current utilities that do this are quite limited in the Macintosh Finder and other "Visual Shells." Direct manipulation interfaces also do not provide convenient mechanisms for expressing abstractions and generalizations, such as "all the objects of type y" or "do command z if the disk is almost full." As a result, experienced users of direct manipulation interfaces find that complex, higher-level tasks that occur commonly are more difficult to perform than should be necessary.

Demonstrational interfaces allow the user to perform actions on concrete example objects (often, by direct manipulation), while constructing an abstract program. This can allow the user to create parameterized procedures and objects without requiring the user to learn a programming language. The term "demonstrational" is used because the user is demonstrating the desired result using example values.

For instance, suppose a user wants to delete all the ".ps" files from a directory. If the user dragged a file named "xyz.ps" to the trash can, and then a file named "abcd.ps", a demonstrational system might notice that a similar action was performed twice, and pop up a window asking if the system should go ahead and delete the rest of the files that end in ".ps".
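The ".ps" scenario can be sketched in a few lines of Python. This is only an illustration of the idea, not the algorithm of any system described here; the function name `propose_generalization` and the rule "generalize once two examples share an extension" are assumptions made for the example.

```python
import os

def propose_generalization(deleted, remaining):
    """Guess which remaining files match the pattern of the demonstrated deletions.

    Returns (extension, candidates), or None when no common pattern is found.
    """
    extensions = {os.path.splitext(name)[1] for name in deleted}
    # Assumed rule: generalize only when at least two examples share one extension.
    if len(deleted) < 2 or len(extensions) != 1:
        return None
    (extension,) = extensions
    if not extension:
        return None
    candidates = [name for name in remaining if name.endswith(extension)]
    return extension, candidates
```

Calling `propose_generalization(["xyz.ps", "abcd.ps"], ["notes.txt", "fig1.ps", "fig2.ps"])` yields the extension `.ps` and the two remaining PostScript files, which a real system would then show in a confirmation window rather than delete silently.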

In addition to providing programming features, demonstrational interfaces can also make direct manipulation interfaces easier to use. For example, if the system guesses the operation that the user is going to do next based on the previous actions, the user might not have to perform it.

There are two ways that demonstration can be used in user interfaces. Some demonstrational interfaces use inferencing, in which the system guesses the generalization from the examples using heuristics. Other systems do not try to infer the generalizations, and therefore require the user to explicitly tell the system what properties of the examples should be generalized. One way to distinguish between an inferencing and non-inferencing system is that a system that guesses can propose an incorrect action even when the user makes no mistakes, whereas a non-inferencing system will always do the correct action if the user is correct (assuming there are no bugs in the software, of course).

The use of inferencing comes under the general category of Intelligent Interfaces. This refers to any user interface that has some "intelligent" or AI component. This includes demonstrational interfaces with inferencing, but also other interfaces such as those using natural language.

Some demonstrational interfaces do not provide full programming. A program is usually defined as a sequence of instructions that are executed by a computer, so any system that executes the user's actions can be considered programmable. However, for the purposes of this paper, it is useful to characterize how programmable the systems are. Therefore, the term programmable will be used for systems that include the ability to handle variables, conditionals and iteration. Note that it is not sufficient for the interface to be used for entering or defining programs, since this would include all text editors. The interface itself must be programmable.

Demonstrational interfaces that provide programming capabilities are called example-based programming systems [Myers 90a]. Interfaces that provide both programming and inferencing are called programming-by-example systems, which come under the topic of "automatic programming." Programming-with-example systems, however, require the programmer to specify everything about the program (there is no inferencing involved), but the programmer can work out the program on a specific example. The system executes the programmer's commands normally, but remembers them for later re-use. Example-based programming can be differentiated from conventional testing and debugging because the examples are used during the development of the code, not just after the code is completed. (Note that other authors in this book define programming-by-example to include all example-based programming systems or even all demonstrational interfaces, as discussed in Appendix C.)

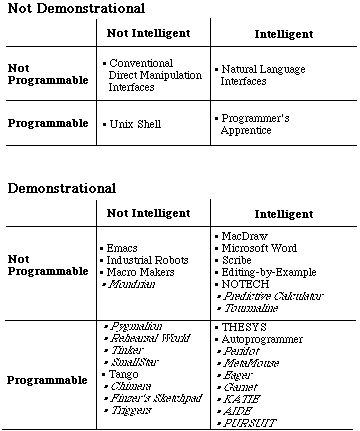

Figure 1. A taxonomy of interfaces. The systems in italics are presented in other chapters of this book.

Naturally, these distinctions are more like continuums than dichotomies, and different people can argue about whether various systems are intelligent or programmable, but I think these are useful criteria with which to look at systems. Figure 1 shows my view of how a number of systems are classified using the definitions above.

In some cases, the abstractions could be provided to the user, but most people are much better at dealing with specific examples than with abstract ideas. Fewer errors are made when working out a problem on an example as compared to performing the same operation in the abstract [Smith 81]. Therefore, demonstrational interfaces may be easier to learn and understand than an interface that requires the user to think abstractly.

Demonstrational techniques can be used to provide these programming capabilities without requiring the user to learn a programming language. With a demonstrational interface, the user operates in the normal interface of the system, but in addition to performing their usual function, the operations can also cause a more general program or template to be created. Variables, loops, conditionals and other programming features can be added to the generated scripts explicitly by the user or automatically by the system using inferencing. The result can be used in multiple contexts. This technique has been successfully demonstrated in many systems described in this book.

Users will find it easier to create procedures this way than by learning a programming language and writing programs. It is well known that learning to program is very difficult for most people. Even the basic concepts of programming, such as representing the problem to be solved as a sequence of steps, can be hard to learn. However, demonstrational programming overcomes these difficulties because the user interactively creates a solution to a concrete problem, rather than working abstractly off-line. Typically, with demonstrational systems, the users operate in the user interface that they are familiar with, and there is no language syntax to learn.

Some systems have added inferencing to this macro mechanism, so the system tries to guess the parameterization of the macro, where loops are appropriate, or even when a macro might be useful. This has been successful in limited domains, such as creating drawings and animations.

In addition to helping the user avoid repetitive actions, demonstrational techniques can also be used to infer semantic, high-level properties of the objects. Most direct manipulation systems provide the user with a small number of simple and direct operations, out of which the desired high-level effects can be constructed. However, if the system can infer what the user's high level intentions are while performing a composite operation, it can save the user from having to perform a number of steps. For example, drawing packages allow the user to change the position and size of individual objects or groups of objects. However, there are rarely tools that help with higher-level effects like getting objects to be evenly spaced. A demonstrational system might watch the user as the first few objects were moved, and automatically infer this high-level property.

Another example of inferencing for semantic properties is the determination of the appropriate "constraints" that should be applied to objects. Constraints are relationships among objects that are maintained by the system. For example, if an architecture system can infer that the windows being placed are on the second floor of a building, then it can automatically ensure that they stay between the floor and ceiling of that floor, even if that floor were edited to be in a different place.

In most cases, it would be possible to provide the user with a command that performed the same action that the system infers from the demonstration. However, the advantages of providing inferencing instead of extra commands are that:

It is clear that demonstrational interfaces will sometimes be harder to use, because the user may know exactly the name of the relationship that is desired, so it might be easier to select it from a menu rather than demonstrate it by example. For example, to demonstrate that a value is supposed to toggle when an action occurs, you need to demonstrate the behavior twice: once when it was originally on, and once when it was originally off. Otherwise, the toggle case cannot be distinguished from always going on or always going off. This will probably be more time-consuming than choosing from "toggle," "set," and "clear" in a menu. Clearly, care must be taken to only use demonstrational techniques when appropriate or to allow the user multiple ways of providing the same commands.
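The toggle ambiguity can be made concrete with a small Python sketch. The three candidate behaviors come from the menu choices named above; the table `HYPOTHESES` and the function `consistent_hypotheses` are hypothetical names introduced only for this illustration.

```python
# Candidate behaviors, each mapping the value before the action to the value after it.
HYPOTHESES = {
    "toggle": lambda before: not before,
    "set": lambda before: True,
    "clear": lambda before: False,
}

def consistent_hypotheses(demonstrations):
    """Return the names of the behaviors that agree with every (before, after) pair."""
    return sorted(
        name
        for name, behavior in HYPOTHESES.items()
        if all(behavior(before) == after for before, after in demonstrations)
    )
```

A single demonstration that starts with the value on, `[(True, False)]`, leaves both "clear" and "toggle" as candidates. Adding the second demonstration, `[(True, False), (False, True)]`, leaves only "toggle", which is why two demonstrations are needed.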

As mentioned earlier, some demonstrational systems attempt to infer the generalization from the examples. For instance, some systems try to guess when to perform a previous operation again. This can cause the system to be less predictable to users. In addition, any system that guesses will sometimes be wrong. Besides performing the wrong operation, a bad guess causes a number of other problems. First, the system must provide appropriate feedback to the user about what it is doing, so the user can find out when it is wrong. This feedback can be intrusive and can slow down the normal operation. (Feedback in demonstrational interfaces is discussed at length below.) Second, the users must have a mechanism to abort or undo the inferences that are incorrect. This can make the interfaces much more difficult to implement. Third, users may be uncomfortable with an interface that may occasionally do the wrong thing. Even if their overall productivity is higher, an occasional error may undermine their willingness to use the system (although, to some extent, appropriate feedback can help provide users with a feeling that they know what the system is doing and that they control it). Finally, if an error is undetected by the user, the system will create an erroneous procedure. Of course, studies have shown that user-generated procedures for spreadsheets often contain errors [Brown 87]. Therefore, spreadsheet users must test and debug the procedures they write. This has not deterred typical spreadsheet users from writing procedures. The same will probably apply for procedures that are generated from examples.

Some other problems are discussed later as research issues.

A number of readers have objected to applying the term "guess" to computers. For example, on a panel at the CHI'91 conference, Ben Shneiderman claimed that since computers execute algorithms which are deterministic, this term is inappropriate [Myers 91c]. I am using the term for systems which use heuristics, since it appears to the users that the system is guessing their intent. Any system with heuristics will sometimes generate an incorrect result. To the users, this will result in a significantly different style of interfaces than conventional ones where the computer is always "right." Under this definition, most artificial intelligence systems guess, as do pattern recognition systems like character recognizers.

There is a separate argument as to whether it is appropriate for computers to use heuristic algorithms (to guess). In the same panel, Ben Shneiderman argued that "If the computer performs complex inferences then the users lose control, predictability can vanish, and the risk of uncertainty increases." However, this and other chapters in this book show that heuristics can provide significant facilities to users that might not be achievable any other way, and that designers should not be afraid to make use of them. Proper attention to the feedback presented to the user can overcome the problems that Shneiderman mentions.

It is probably easier to appreciate the differences between demonstrational and conventional direct manipulation interfaces by seeing a videotape of a demonstrational system in action. Videos of a number of the systems mentioned in this book, including SmallStar, Peridot, Metamouse, Tinker, Chimera, and Eager, are available as SIGGRAPH Video Reviews (to order, contact ACM SIGGRAPH, c/o First Priority, PO Box 576, Itasca, Ill, 60143, (800) 523-5503).

Systems that are not demonstrational, programmable or intelligent are the conventional user interfaces, including non-programmable command lines and most direct manipulation systems. The intelligent, non-demonstrational, non-programmable interfaces include most of the systems developed by AI researchers, including natural language understanding, intelligent help, etc. All of the conventional systems that allow the user to program in the interface are included in the category of programmable, non-intelligent interfaces. This includes the Unix Shell and the programming language embedded in Emacs (called MockLisp in some versions). A system that can be classified as intelligent, programmable, and not demonstrational is the Programmer's Apprentice [Rich 88], which uses AI to help the user create programs.

Systems that save the keystrokes that the user types and will replay them later are also classified as demonstrational, not programmable, and not intelligent. The most famous example of this is in the Emacs text editor [Stallman 87]. Here, the user goes into program mode, gives a number of commands in the usual way, and then leaves program mode. The commands execute normally while the macro is being created, so the user only has to learn three new commands to use macros: Start-Recording, Stop-Recording and Execute Macro, since the rest of the commands are the ones that are used every day while editing. To replay the macro, the user moves the cursor to the appropriate new place and gives the Execute Macro command. Many spreadsheet programs also have the ability to record the user's keystrokes to construct a macro as the user is seeing the results of the macro executed on example data.
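A minimal Python sketch of this style of transcripting follows. It is a toy, not the Emacs implementation: the `Editor` class, its two editing commands, and the method names are all assumptions made for illustration. The point it shows is that commands execute normally while they are being recorded, and that replay simply re-issues them at the current cursor position.

```python
class Editor:
    """Toy text buffer with a cursor and a keyboard-macro recorder."""

    def __init__(self, text=""):
        self.text = text
        self.cursor = 0
        self.recording = None  # list of commands while recording, otherwise None
        self.macro = []

    def do(self, command, *args):
        # Commands run normally; while recording they are also remembered.
        if self.recording is not None:
            self.recording.append((command, args))
        getattr(self, "_" + command)(*args)

    def _insert(self, s):
        self.text = self.text[:self.cursor] + s + self.text[self.cursor:]
        self.cursor += len(s)

    def _move(self, offset):
        self.cursor = max(0, min(len(self.text), self.cursor + offset))

    def start_recording(self):
        self.recording = []

    def stop_recording(self):
        self.macro, self.recording = self.recording, None

    def execute_macro(self):
        # Replay the remembered commands at the current cursor position.
        for command, args in self.macro:
            self.do(command, *args)
```

For example, recording `do("insert", "* ")` followed by `do("move", 1)` on the buffer `"ab"` and then executing the macro once produces `"* a* b"`. Nothing is generalized: the macro repeats exactly what was typed, which is why this kind of system is classified as neither programmable nor intelligent.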

Simple transcripting like this has also been used in mouse-based programs for the Macintosh user interface, such as MacroMaker from Apple. Unfortunately, it is less successful here than in text editors or robots because the transcripting programs are not tied to a particular application and therefore can only save raw mouse and keyboard events. For example, the transcript will save information such as "the mouse button went down at location (23,456)". The fact that there was a particular icon at that location may not be recorded. If the windows and icons move after the macro is created, the locations saved may not correspond to the correct objects, so the macros will not work correctly. Some programs, such as Tempo II from Affinity MicroSystems and QuicKeys from CE Software, make a transcript of somewhat higher-level commands, but in general, it is necessary to have specific high-level knowledge about the application being run to make transcripting useful [Cohn 88]. David Kosbie's system (Chapter 22) tries to overcome these problems.

Mondrian (Chapter 16) is classified as non-programmable and non-intelligent since it does not currently incorporate loops, conditionals, or inferencing.

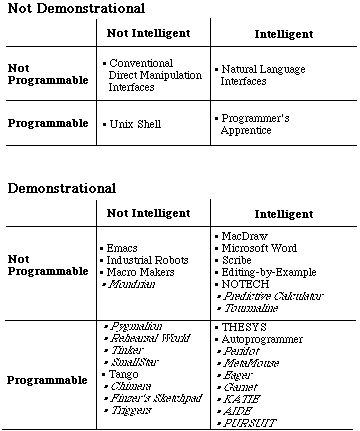

There are a number of popular systems that use inferencing (heuristics) in very simple ways. For example, the Macintosh programs Adobe Illustrator and Claris MacDraw remember the transformations used on graphic objects after a "Duplicate" operation and guess that the user wants the same transformations for new objects, so they are applied automatically (see Figure 2). This may be an incorrect guess, and the new object may even appear off the screen (if the initial transformation was large), but most of the time this feature is useful to the users.

III. Research Issues

A. Vision of End user environment

1. Integration of information

3. Malleable Interfaces

b. End user programming

i) Forms for user programs

ii) new user objects are first class objects

iii) Two benefits

Sharing of user created objects

a. Integrating applications

c. customization of the environment

2. Shared interfaces (CSCW)

Vision of new tools

B. Vision of interface development environment

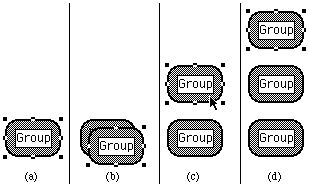

(a)

III. Research Issues

A. Vision of End user environment

1. Integration of information

2. Malleable Interfaces

a. End user programming

i. Forms for user programs

ii. new user objects are first class objects

iii. Two benefits

iv. Sharing of user created objects

b. Integrating applications

c. customization of the environment

3. Shared interfaces (CSCW)

B. Vision of new tools

C. Vision of interface development environment

(b)

In Microsoft Word 4.0, the "Renumber" command will look at the first paragraph in the selection and see if there is some form of number there. If so, it will use the formatting of that number to determine how the numbers at the beginning of all the paragraphs should look (see Figure 3). For example, if the paragraph starts with "3) " it will guess that paragraphs should be numbered using numerals followed by a parenthesis. It will also guess multi-level numbering, so if the example has roman numerals on main paragraphs and letters on indented paragraphs, it will renumber all the levels appropriately. Microsoft Word will guess wrong in some cases, however. For example, in Figure 3, Word has changed the punctuation after the lower case roman numerals from parentheses to periods, which is probably not what the user had in mind.
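The single-level case of this kind of inference can be sketched in Python. This is not Word's algorithm, which is not described here; the pattern `LABEL`, the function `renumber`, and the decisions to restart numbering at the first value and to ignore roman numerals and multi-level outlines are all simplifying assumptions.

```python
import re

# Leading label: an arabic numeral or a single letter, then "." or ")", then spaces.
LABEL = re.compile(r"^(\d+|[A-Za-z])([.)])\s+")

def renumber(paragraphs):
    """Renumber paragraphs using the label style found on the first one."""
    first = LABEL.match(paragraphs[0])
    if first is None:
        return list(paragraphs)  # no example label, so nothing to infer
    sample, punctuation = first.groups()

    def label(index):
        if sample.isdigit():
            return str(index + 1)
        base = ord("a") if sample.islower() else ord("A")
        return chr(base + index)  # letters only cover the first 26 paragraphs

    result = []
    for index, paragraph in enumerate(paragraphs):
        body = LABEL.sub("", paragraph, count=1)  # drop any existing label
        result.append(f"{label(index)}{punctuation} {body}")
    return result
```

Given `["3) alpha", "7. beta", "gamma"]`, the sketch takes "numeral followed by a parenthesis" from the first paragraph and returns `["1) alpha", "2) beta", "3) gamma"]`. Like any guess, it can be wrong: a paragraph that legitimately begins with an initial such as "A. " would lose it.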

Another small use of inferencing from an example is the formatting for the date in the Scribe text formatter [Reid 85]. When specifying the way the date should be printed, the user can supply an example in "nearly any notation" for the particular date March 8, 1952, and Scribe will convert this for use with the current date. For example, formats like the following are accepted for specifying how the current date should be printed:

@Style(Date="03/08/52")

@Style(Date="March 8, 1952")

@Style(Date="Saturday, 8th of March")

@Style(Date="8-Mar-52")

@Style(Date="the eighth of March, nineteen fifty-two")
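One way to approximate this behavior is to look for the known spellings of the reference date in the example and replace each with the corresponding field of the current date. The Python sketch below does this for the numeric and named forms; it is an illustration under stated assumptions, not Scribe's implementation. The table `FIELDS` and the functions `ordinal` and `format_like` are hypothetical, and fully spelled-out forms such as "the eighth of March, nineteen fifty-two" are not handled.

```python
import re
from datetime import date

MONTHS = ["January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"]
DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]

def ordinal(n):
    """Render 1 as '1st', 2 as '2nd', 11 as '11th', and so on."""
    if 10 <= n % 100 <= 20:
        return f"{n}th"
    return f"{n}" + {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")

# Spellings of the reference date March 8, 1952 (a Saturday), each paired with a
# function that renders the same field for another date. Longer spellings come
# first so that, for instance, "1952" is not read as the two-digit year "52".
FIELDS = [
    ("Saturday", lambda d: DAYS[d.weekday()]),
    ("March", lambda d: MONTHS[d.month - 1]),
    ("Mar", lambda d: MONTHS[d.month - 1][:3]),
    ("1952", lambda d: str(d.year)),
    ("52", lambda d: f"{d.year % 100:02d}"),
    ("03", lambda d: f"{d.month:02d}"),
    ("08", lambda d: f"{d.day:02d}"),
    ("8th", lambda d: ordinal(d.day)),
    ("8", lambda d: str(d.day)),
    ("3", lambda d: str(d.month)),
]
PATTERN = re.compile("|".join(re.escape(token) for token, _ in FIELDS))
RENDER = dict(FIELDS)

def format_like(example, d):
    """Format date d the way the example formats March 8, 1952."""
    return PATTERN.sub(lambda match: RENDER[match.group(0)](d), example)
```

With this sketch, `format_like("8-Mar-52", date(1991, 7, 4))` gives `"4-Jul-91"`, and `format_like("Saturday, 8th of March", date(1991, 7, 4))` gives `"Thursday, 4th of July"`. The reference date works well as an example because its day, month, and year are all different numbers, so each field can be identified unambiguously.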

Another use of heuristics in commercial products is that when you ask for a chart or plot in many spreadsheet packages, the systems will try to guess what kind would be best based on the form of the data.

Among research systems, "Editing by Example" (EBE) [Nix 85] uses demonstrational techniques to create transformations of the text (i.e., replace this with that). The system compares two or more examples of the input and resulting output of a sequence of editing operations in order to deduce what are variables and what are constants. The correct programs usually can be generated given only two or three examples, and there are heuristics to generate programs from single examples. For instance, the system can deduce a program to convert all occurrences of @i(<text>) to {\sl <text>} from editing @i(O.K.) to {\sl O.K.} and then editing @i(Boston Post) to {\sl Boston Post}. The generated program can then be run on other parts of the document. Unlike the Emacs macros, however, EBE uses the results of the text editing, not the particular editing operations used. The primary inferencing here is to differentiate variables from constants.
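The distinction between constants and variables can be illustrated with a deliberately simple Python sketch. It is not the EBE algorithm: it assumes exactly one variable region per example, treats the text shared by all examples at the start and end as constant, and does not handle nested parentheses. The function name `infer_rewrite` is hypothetical.

```python
import os
import re

def common_prefix(strings):
    return os.path.commonprefix(strings)

def common_suffix(strings):
    return os.path.commonprefix([s[::-1] for s in strings])[::-1]

def infer_rewrite(examples):
    """Infer a rewrite function from (before, after) pairs, or return None."""
    befores = [before for before, _ in examples]
    afters = [after for _, after in examples]
    b_pre, b_suf = common_prefix(befores), common_suffix(befores)
    a_pre, a_suf = common_prefix(afters), common_suffix(afters)
    if not (b_pre or b_suf):
        return None  # nothing constant to anchor the pattern on
    for before, after in examples:
        # Whatever is not constant is the variable; it must be carried over unchanged.
        variable_in = before[len(b_pre):len(before) - len(b_suf)]
        variable_out = after[len(a_pre):len(after) - len(a_suf)]
        if variable_in != variable_out:
            return None
    pattern = re.compile(re.escape(b_pre) + "(.*?)" + re.escape(b_suf))

    def rewrite(text):
        return pattern.sub(lambda m: a_pre + m.group(1) + a_suf, text)

    return rewrite
```

From the two examples in the text, the sketch finds the constants `@i(` and `)` on the input side and `{\sl ` and `}` on the output side, and the returned function rewrites other occurrences in the document. With only one example everything looks constant, so the result would match that one string only, which is why single examples require extra heuristics.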

The NOTECH text formatter from Princeton University allows users to type documents in plain text, with no formatting commands, and tries to infer the appropriate formatting from the spacing and contents of the document to produce attractive laser-printer output [Lipton 90]. For example, a single line is assumed to be a header, a group of short pieces of text separated by tabs are assumed to be a table, and text that contains Pascal or C statements is formatted as code. NOTECH is a pre-processor for TeX and uses a set of rules to parse the input.

Witten's predictive calculator (Chapter 3) is classified here because it tries to infer the next step from the example input, but it does not support loops or conditionals.

Tourmaline (Chapter 14) allows the user to create text formatting macros by example. The "Styles" supplied by formatters like Microsoft Word only look at the first character of the selection, so they will not capture the formatting information of complex chapter headings with many different formats. Tourmaline, however, allows the user to enter that heading, select it, and create a style from it.

The seminal system that used demonstrational techniques for general-purpose programming is Pygmalion (Chapter 1), which supported programming using icons. Pygmalion, which was developed in 1975, did not use inferencing. The user moved icons around to demonstrate to the system how a computation should be carried out.

In Rehearsal World (Chapter 4), the user writes programs by placing objects on the screen, and then showing the dependencies between objects. Each object has code attached to it, and the user can demonstrate the behavior of the code by clicking on an "eye" icon next to the code, and then showing what should happen. There is no inferencing.

Tinker (Chapter 2) allows the user to choose a concrete example first, and then type in code which is immediately executed on that example. For conditionals, Tinker requires the user to demonstrate the code on two examples: one that will show each branch. Tinker supports iteration through recursion, but it does not try to infer the program from the example: the user enters the code directly.

SmallStar (Chapter 5) allows the end user to program a prototype version of the Star office workstation desktop. (Note that although Halbert calls SmallStar "Programming by Example," since the system does not use inferencing (heuristics), I label it programming with examples instead.) The desktop, sometimes called the "Visual Shell," is an iconic interface that supports file creation, deletion, moving, copying, printing, filing in directories (called folders), etc. [Smith 81]. When programming with SmallStar, the user enters program mode, performs the operations that are to be remembered, and then leaves program mode. The operations are executed in the actual user interface of the system, which the user already knows. A textual representation of the actions is generated, which the user can edit to differentiate constants from variables and explicitly add control structures such as loops and conditionals.

A "program visualization" system allows the user to view the execution of a computer program using pictures [Myers 90a]. A number of systems have allowed the user to specify the desired picture by demonstration. For instance, the Tango system [Stasko 91] allows the user to draw example pictures of the objects to appear in the animation, and then attach the various properties of the objects to appropriate program variables and actions.

Chimera (Chapter 12) is classified as not intelligent because the primary programming part (the editable graphical histories) does not use inferencing. If you include the inferred constraints from multiple snapshots, then it would move to the "intelligent" column.

Finzer's SketchPad system (Chapter 13) (not to be confused with Sutherland's famous system of the same name from 1963) is classified as programmable because it does support recursion and conditionals for the associated stopping criteria. Triggers (Chapter 17) contains mechanisms for conditionals, and it can be set up to repeatedly execute pieces of code.

One of the first systems of this type was the "Trainable Turing Machine" [Biermann 72] in which the user supplied examples of the Turing machine's input, output and head movements during a computation, and the system would algorithmically create its own finite state controller that handled a class of "similar" computations. THESYS is another automatic programming system that attempted to infer programs from multiple examples of the input and resulting output lists [Summers 77]. A similar system [Shaw 75] tried to generate Lisp programs from examples of input/output pairs, such as (A B C D) ==> (D D C C B B A A). It is clear that systems such as these are not likely to generate the correct program. In general, induction of complex functions from input/output is intractable [Angluin 83].

Another approach, exemplified by Autoprogrammer [Biermann 76b], attempts to infer general programs using examples of traces of the program execution. The user gives all the steps of one or more passes through the execution of the procedure on sample data. Then, the system tries to determine where loops and conditionals should go, as well as which values should be constants and which should be variables. The authors hoped that this would expand the class of programs that could be automatically inferred, but it was not very successful for general-purpose programming, because the system often guessed wrong and users needed to study the generated programs to see if they were correct.

The use of inferencing has been more successful when applied to highly-constrained special-purpose areas. For example, the Peridot system (Chapter 6) allows a designer to create user interface components such as menus, scroll bars, and buttons with a graphical editor. Example values for such things as the items in the menu and the position of the scroll bar indicator allow the interfaces to be constructed by direct manipulation. Peridot uses inferencing to guess how the various graphic elements depend on the example parameters (for example, that the menu's border should be big enough for all the strings), to guess when an iteration is needed (for example, to place the rest of the items of a menu after the positions for two have been demonstrated), and to guess how the mouse should control the interface (for example, to move the indicator in the scroll bar).

Metamouse (Chapter 7) is also based on a graphical editor, but it watches as the user creates and edits pictures in a 2D click-and-drag drafting package, and will try to generalize from the actions to create a general graphical procedure. If the user appears to be performing the same edits again, the system will perform the rest of them automatically. Inferencing is used to identify geometric constraints in editing operations, to determine where conditionals and loops are appropriate, and to differentiate variables from constants.

Eager (Chapter 9) is a "smart macro" tool for the HyperCard environment. HyperCard is a Macintosh program that allows users to build custom, direct-manipulation applications. Like Metamouse, Eager infers an iterative program to complete a task after the user has performed the first two or three iterations. Although it does not handle conditionals explicitly, it does use them as stopping conditions for iterations, so Eager is classified as programmable. An innovation in Eager is its technique for providing feedback to the user about how the system has generalized the user's actions. Eager provides feedback by anticipation: when it recognizes a pattern, it infers what the user's next action will be, and uses color to highlight the location where the user will next click the mouse (e.g. a menu item, or a button on the screen) or to display the text that the user is expected to type next. Most other systems (e.g. Peridot and Metamouse) explain in text what the user is expected to do. If the user performs the anticipated action, this confirms the inference; if the user performs a different action, this provides a new example for Eager to use in determining a better inference about how to generalize the user's actions.
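The core of anticipating the next action can be sketched in Python under strong simplifying assumptions: each action is a hypothetical `(command, number)` pair, an iteration is detected when the two most recent groups of actions use the same commands in the same order, and the numeric argument is assumed to advance by a constant step. This is an illustration of the idea only, not Eager's actual pattern matcher.

```python
def predict_next(history, max_period=4):
    """Predict the next (command, number) action from a repeating pattern, or None."""
    for period in range(1, max_period + 1):
        if len(history) < 2 * period:
            break
        previous = history[-2 * period:-period]
        latest = history[-period:]
        # The two most recent groups must use the same commands in the same order.
        if [command for command, _ in previous] != [command for command, _ in latest]:
            continue
        # The next action repeats the one a full period back, with its number advanced
        # by the step observed between the two groups.
        command, number = latest[0]
        step = number - previous[0][1]
        return command, number + step
    return None
```

After the history `[("select", 1), ("copy", 1), ("select", 2), ("copy", 2)]`, the sketch predicts `("select", 3)`. In the spirit of feedback by anticipation, a system would highlight that predicted target; if the user's next action matches the prediction the inference is confirmed, and if not, the new action becomes another example.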

The various tools in Garnet (Chapter 10) extend the ideas in Peridot to allow demonstration of other parts of the user interface.

KATIE (Chapter 22) and AIDE (Chapter 18) are architectures for extending macro recorders with application-specific information so that the macros will be more meaningful. They incorporate automatic inferencing of loops and conditionals. PURSUIT (Chapter 20) allows end users to create programs in a visual shell (the user interface to a file system, sometimes called a "desktop" or "finder"). It uses a graphical comic-strip representation for the programs (inspired by Chimera), and does some limited inferencing of where loops and conditionals should be.

If inferencing were added to program visualization systems, then the system could guess how to generalize from the example pictures to the actual data. For instance, there are many different ways to display a binary tree. The system could allow the user to draw a sample display, and use the example to choose which type of tree display is desired, and the appropriate parameters for that display. The user would then not have to understand the meanings of the various layout options and parameters.

Adding inferencing to macro recorders might allow the systems to guess where the variables and control structures should be, without requiring the user to specify them. The Eager system shows that iteration can be guessed successfully. Instead of requiring the user to select the example object and explicitly generalize it, the system might watch two example operations, notice that a different object was used, and create a variable automatically. The example of deleting ".ps" files was given in the introduction.

Future research should be directed at inferring graphical properties from the example pictures. One common problem with simple drawing packages like Macintosh MacDraw is that there is no mechanism for specifying how the graphical objects relate to one another. For example, a line may be drawn to a box, but it will not stay attached if the box is moved. Other drawing packages, including Sketchpad [Sutherland 63] and Juno [Nelson 85], have addressed this problem by allowing the user to specify constraints on the objects. Unfortunately, users often have a difficult time correctly specifying and understanding constraints. Peridot demonstrated that it is possible for the system to successfully guess graphical constraints on objects if the type of drawing is known to the system. This technique could be used in more general drawing packages so that parts of the pictures could be edited and still retain important properties. Reportedly, the new IntelliDraw drawing program from Aldus does this. Another advantage to the demonstrational approach is that the constraints are guaranteed to correctly create the example picture, whereas when constraints are explicitly applied, the user can accidentally set up a situation where there is no possible solution to the constraints.

Inferencing could also be used to help users create the objects neatly. In many direct manipulation interfaces, certain points attract the cursor when it is moved near them, which makes it easier to create the desired pictures. For example, if the cursor is close to the end point of a line, it will jump to be exactly on the end point (as if attracted by gravity). This idea can be extended to provide inferred gravity points. For example, if the user has drawn two objects a certain distance apart, the system could insert a gravity point at the same distance from the second object, in case a third is desired. In a similar way, the positions and sizes for alignment lines and circles, as used in computerized "ruler" and "compass" tools, such as the Snap-Dragging system [Bier 86], might also be inferred from the drawing. The Briar system [Gleicher 92] uses techniques like this to construct objects which can then be manipulated while retaining their relationships.
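The inferred gravity point for a third object can be computed directly from the first two. The Python sketch below assumes objects are represented by a single reference point, and the snapping radius of 5 units is an arbitrary choice; the function names are hypothetical and are not taken from Snap-Dragging or Briar.

```python
def inferred_gravity_point(first, second):
    """Continue the spacing from first to second: the likely spot for a third object."""
    (x1, y1), (x2, y2) = first, second
    return (2 * x2 - x1, 2 * y2 - y1)

def snap(cursor, gravity_points, radius=5.0):
    """Move the cursor onto the nearest gravity point within radius, else leave it."""
    best = None
    best_distance = radius
    for point in gravity_points:
        distance = ((cursor[0] - point[0]) ** 2 + (cursor[1] - point[1]) ** 2) ** 0.5
        if distance <= best_distance:
            best, best_distance = point, distance
    return best if best is not None else cursor
```

For objects at (10, 20) and (40, 20), the inferred gravity point is (70, 20). A cursor released at (68, 22) snaps to it, while a cursor at (100, 100) is left alone, so the inference helps when the guess is right and stays out of the way when it is not.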

The Peridot system was discussed above, and there are many others. Lapidary (Chapter 10) allows application-specific graphical objects to be created by demonstration without programming. For example, the designer can draw examples of the boxes and arrows that will be the nodes and arcs in a graph editor. The goal of Lapidary is to allow all graphical aspects of the program to be drawn using the mouse, and to allow most aspects of the behavior of the interface to be defined by demonstration without programming. Lapidary does not use any inferencing.

Druid [Singh 90] tries to guess the alignment of pre-defined widgets (such as buttons and menus) when they are placed in a user interface. It also allows the designer to demonstrate the sequence of end user actions (e.g., after the user hits this button, grey out that button and pop up this dialog box).

The Mike user interface management system [Olsen 88] allows macros to be specified by example for a wide range of user interfaces. It does not use inferencing. As the macro is being created, a variable reference can be inserted by adding a special parameter and then referring to the parameter instead of to an actual object. The system will then prompt the user to give an example value for that parameter to be used in the rest of the demonstration. Control structures can be explicitly added to the macro by first selecting the type of iteration or conditional from a menu, and then demonstrating the statements which go inside the control structure.
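The idea of referring to a parameter instead of an actual object can be illustrated with a small sketch. The representation below (a macro as a list of command and argument pairs, with a hypothetical `Param` marker) is an assumption made for illustration and is not Mike's actual data structure.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Param:
    """Placeholder recorded in a macro in place of a concrete object (hypothetical)."""
    name: str

def run_macro(macro, bindings):
    """Replace each Param with its bound value and return the concrete command list."""
    return [
        (command, bindings[arg.name] if isinstance(arg, Param) else arg)
        for command, arg in macro
    ]

# Recorded with "box1" as the example value, but stored with a parameter reference.
macro = [
    ("select", Param("shape")),
    ("set_color", "red"),           # a constant: the same for every invocation
    ("move_to_front", Param("shape")),
]
print(run_macro(macro, {"shape": "circle7"}))
```

Because the parameter is inserted explicitly by the user, nothing has to be inferred: the example value only serves to let the rest of the demonstration proceed on a concrete object.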

Demonstrational techniques might make spreadsheet programming easier, by automatically determining which cell references should be variables and which should be constants when constructing macros or when copying formulas from one cell to another.
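One way such a determination might work is to compare two example formulas: a reference that keeps the same offset from the formula's own cell is probably a variable, while a reference that names the same cell both times is probably a constant. The sketch below assumes cells are written as (column, row) number pairs and that each example lists its references in the same order; the function names are hypothetical.

```python
def classify_references(examples):
    """examples: list of (target_cell, [referenced cells]). Returns one label per reference."""
    kinds = []
    for i in range(len(examples[0][1])):
        cells = {refs[i] for _, refs in examples}
        offsets = {(refs[i][0] - t[0], refs[i][1] - t[1]) for t, refs in examples}
        if len(cells) == 1 and len(offsets) > 1:
            kinds.append("constant")     # same cell every time
        elif len(offsets) == 1 and len(cells) > 1:
            kinds.append("variable")     # same position relative to the formula
        else:
            kinds.append("ambiguous")    # the examples do not settle the question
    return kinds

def references_for(target, examples, kinds):
    """Build the references for a new target cell; ambiguous ones are kept as constants."""
    base_target, base_refs = examples[0]
    result = []
    for ref, kind in zip(base_refs, kinds):
        if kind == "variable":
            ref = (ref[0] - base_target[0] + target[0], ref[1] - base_target[1] + target[1])
        result.append(ref)
    return result

# C1 = A1 * E1 and C2 = A2 * E1, with columns numbered A=1, C=3, E=5.
examples = [((3, 1), [(1, 1), (5, 1)]), ((3, 2), [(1, 2), (5, 1)])]
kinds = classify_references(examples)
print(kinds)
print(references_for((3, 3), examples, kinds))
```

With only one example every reference is ambiguous, which is why a second demonstration (or a question to the user) is needed before generalizing.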

One interesting idea is to investigate whether demonstrational techniques can be used when the user needs information about how to achieve a certain task. Current help systems are good at explaining what a command will do, but very poor when the user has a task in mind but does not know how to achieve it. For example, the user may want to sort some items, or move a row of objects down. It might be possible for the user to perform a few low-level actions that embody some of the required steps, and the system could try to guess the remaining commands that would be appropriate.

There are many other possible applications of this technology, including creating educational software and animations. Further research is needed to determine when demonstrational techniques can be profitably applied.

For example, experiments showed that experienced Star users who were not programmers were able to create moderately complex programs using SmallStar (Chapter 5). Peridot (Chapter 6) was tested on five non-programmers and five programmers, and all were able to create user interface elements such as menus after about an hour and a half of instruction. Creating a menu with a custom appearance took them about 15 minutes, whereas it took the most experienced programmers between one and eight hours to code using conventional programming languages [Myers 88]. Users of Peridot did not seem to mind that the system occasionally guessed incorrectly.

The Eager system (Chapter 9) was also tested with users. The system usually guessed the correct loops in the users' programs, but users were nervous about letting the system go ahead and do the rest of the iterations, because they were not confident that the system would do the correct thing. A particular problem was that the stopping criterion was not made apparent to the users [Cypher 91a].

Much more work is needed to evaluate when and how to use demonstrational interfaces, and how to provide feedback and other aids so users are comfortable with them.

Even if the generated procedure seems to operate correctly on the example data, it may not work for other important cases. It will be interesting to see whether or not procedures that are demonstrated turn out to be sufficiently reliable in practice.

One idea is to have the system replay the demonstration like a movie, showing the actions that the user performed one at a time, and then the user can stop the replay at any point to specify differences. The edits can make the rest of the procedure invalid, however, so the system will have to be careful about allowing replay to continue after the edit. In some situations, the procedure will have potentially damaging side effects (such as deleting files), so a naive replay is not appropriate. Therefore, some of the operations will have to be simulated or not carried out. In general, editing in demonstrational interfaces is an area that needs significant further study.
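A replay that steps through the recorded actions, can be stopped for editing, and simulates the dangerous operations might look roughly like the following. This is a sketch under stated assumptions: the `Step` record, the `destructive` flag, and the `replay` function are hypothetical names, and how a real system would decide which operations are destructive is left open.

```python
from dataclasses import dataclass

@dataclass
class Step:
    """One recorded user action (hypothetical representation)."""
    name: str
    argument: str
    destructive: bool = False

def replay(steps, execute, stop_at=None):
    """Replay steps in order; destructive steps are only reported, never executed.

    Returns the log of what happened and the index at which replay stopped,
    so the user can edit the procedure from that point.
    """
    log = []
    for index, step in enumerate(steps):
        if stop_at is not None and index == stop_at:
            return log, index
        if step.destructive:
            log.append(f"simulated {step.name} {step.argument}")
        else:
            execute(step)
            log.append(f"ran {step.name} {step.argument}")
    return log, len(steps)

recorded = [
    Step("select", "xyz.ps"),
    Step("delete", "xyz.ps", destructive=True),
    Step("select", "abcd.ps"),
]
performed = []                       # stands in for the real application
log, stopped = replay(recorded, performed.append, stop_at=2)
print(log, stopped)
```

The sketch does not address the harder problem noted above: after an edit, the remaining steps may no longer be valid, so a real system would need to check them before continuing.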

For help with this paper, I want to thank Ben Shneiderman, Francesmary Modugno, David Maulsby, Richard Potter, Bernita Myers, Brad Vander Zanden, Andrew Mickish, and the editors of this book.
